CUSTOMER SUPPORT DASHBOARD: T-MOBILE INTERNAL REPRESENTATIVES

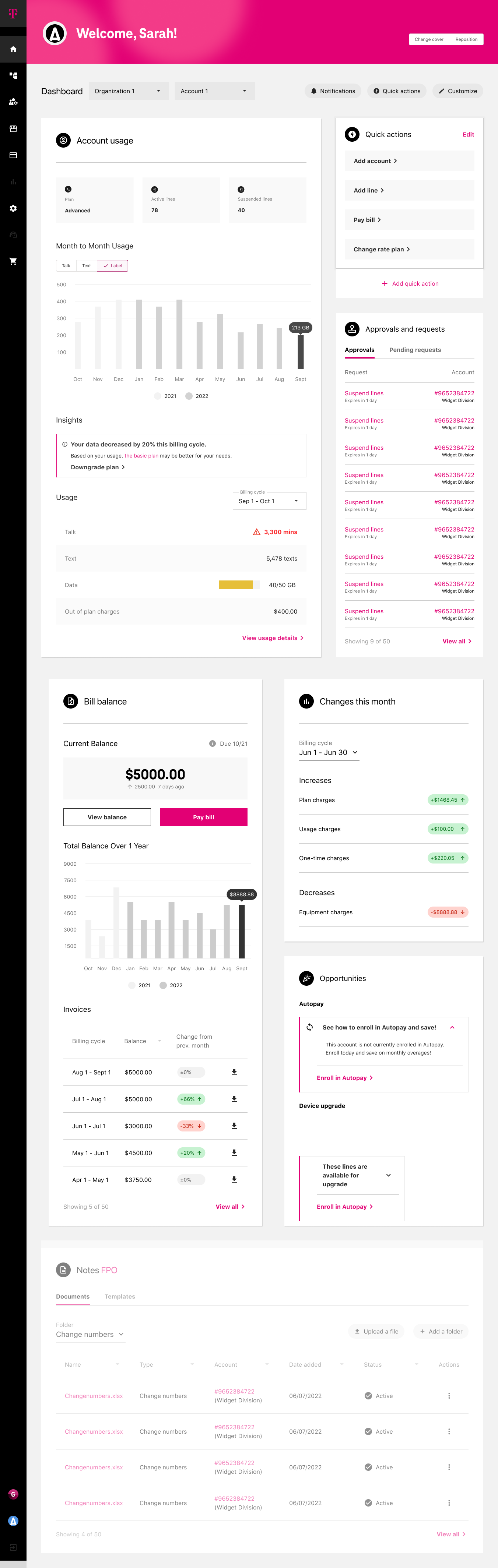

CARE DASHBOARD | T-Mobile

Project Description

COMPANY

T-Mobile for Business

ROLE

Lead Designer

Product Designer

Workshop - Co-lead

PROCESS

User Research

Competitive Analysis

Wireframing

OVERVIEW

The Care organization relied on fragmented reporting tools that evolved without governance or alignment. Metrics were duplicated, dashboards varied by team, and effort often outweighed measurable impact.

This project focused on restructuring the analytics ecosystem into a modular, decision-driven dashboard framework that reduced subjective feedback loops and introduced scalable evaluation criteria.

CORE PROBLEM

Reporting evolved without system governance

Metrics lacked canonical definitions across teams

Visualization choices were inconsistent

Effort was not tied to measurable impact

Feedback loops were opinion-driven

The system optimized for presentation, not decisions.

THE GOAL

The goal was to understand:

Core responsibilities and day-to-day workflows

Tools currently used to support customer communication

Pain points within existing systems

Current Account Hub usage patterns

We also examined how Care interacts with adjacent roles (e.g., TEM, IT Equipment Manager, Business Owner) and how those dynamics impact issue resolution and customer outcomes.

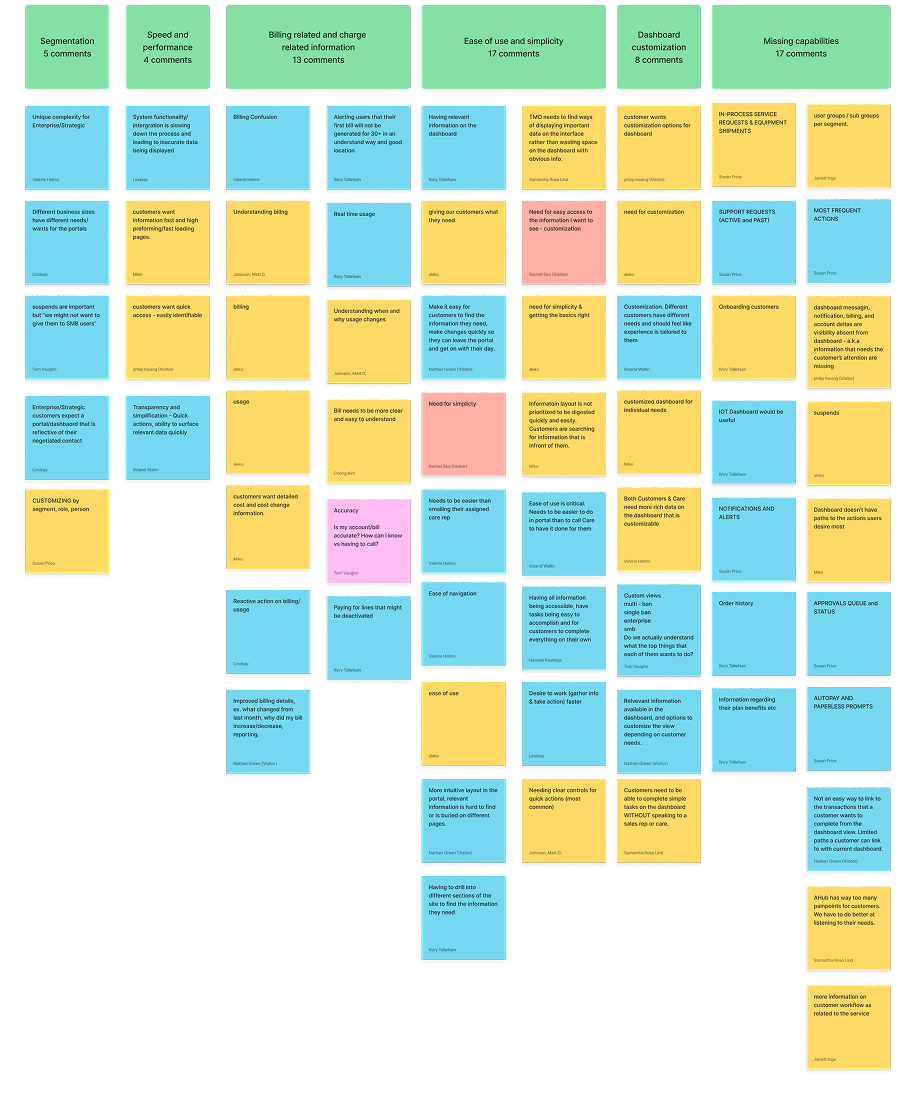

Card sorting: Effort vs. Value

Widget Audit & Gaps

As part of discovery, Care audited existing dashboard widgets to determine:

Which widgets supported their needs

Which were missing or incomplete

Opportunities for new or revised components

Special attention was given to collaboration features such as Notes:

Visibility and privacy controls

Sharing permissions

Cross-role accessibility

TEAM

UX/UI

Accessibility

Development team

3rd Party Vendor

Project Management

Business Owners

Voting on priorities

Workflow & Escalation Context

We explored:

How Care supports customers when they are blocked from achieving goals

Common issue resolution patterns

What information Care needs immediate access to during live interactions

Process

Iteration: From Exploration to Convergence

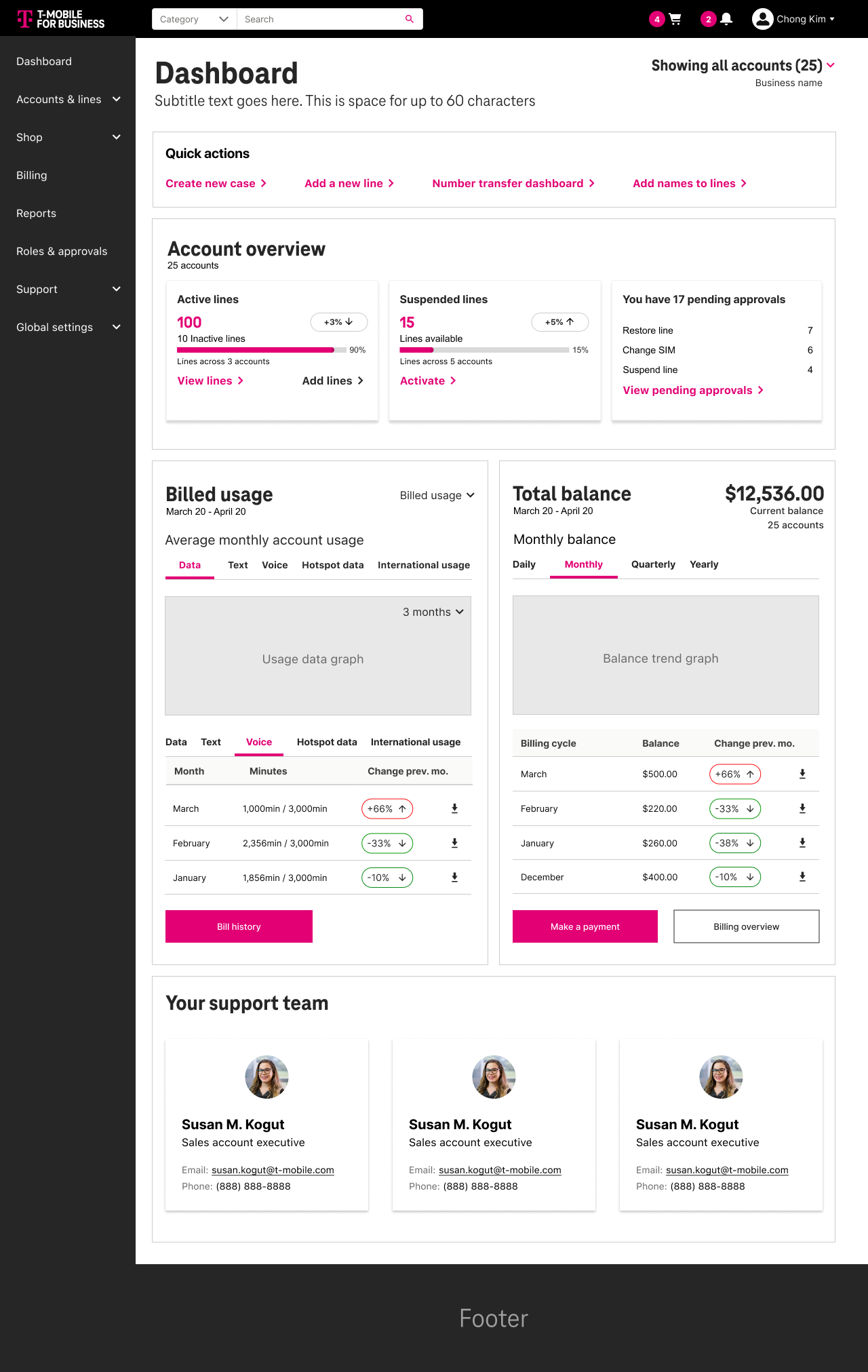

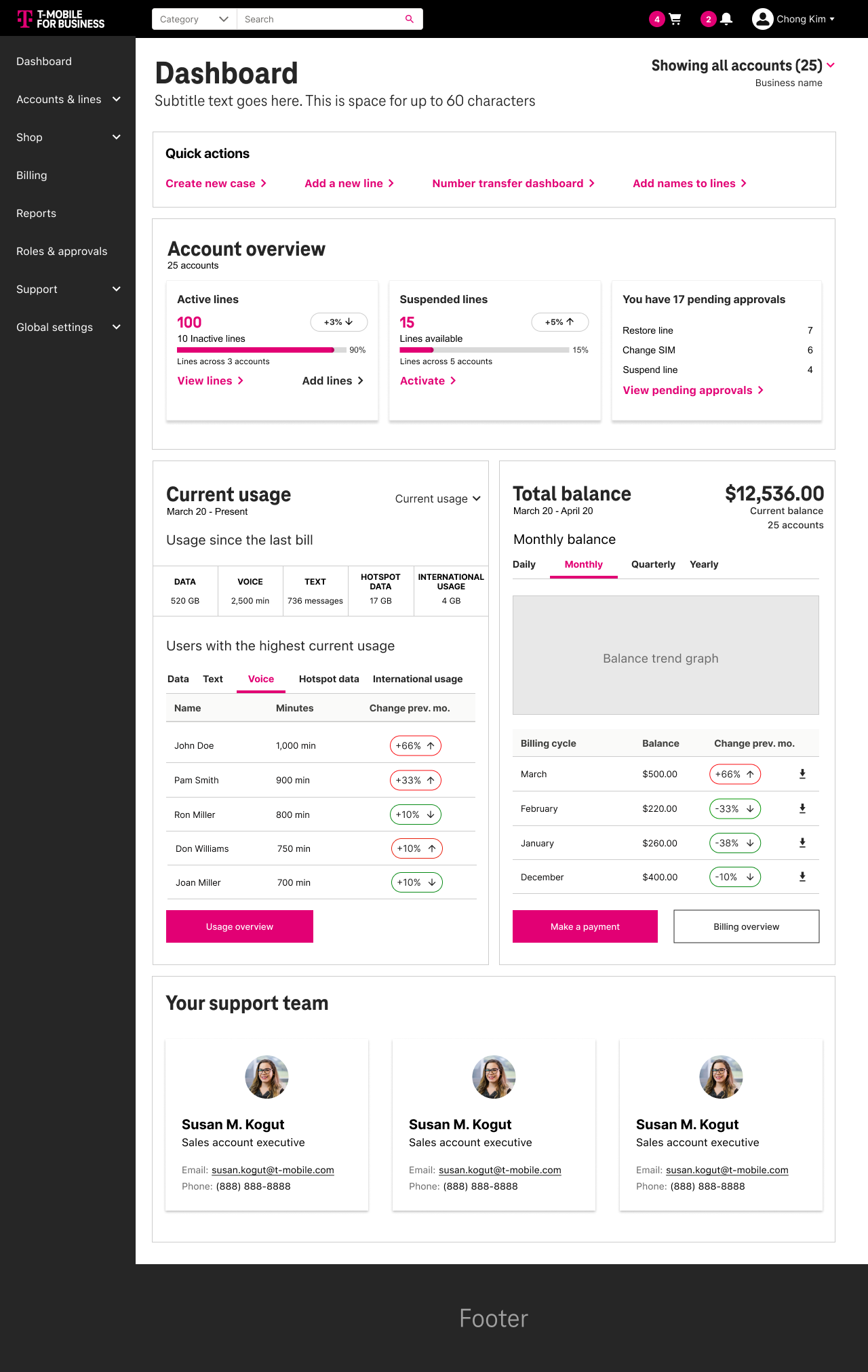

While testing the dashboard designs, we found that the Salesforce back end could not handle the data load. Although the goal was to surface four customizable dashboards for different users, we prioritized speed, because slow performance had been a pain point on the legacy platform.

Round 6 comp

What changed between rounds 5 and 6

The Salesforce back end could not handle the data API ingestion, which made the site lag. Instead of offering four customizable dashboards, we simplified the design and gave each widget that had lived on the dashboard its own landing page.

In effect, the widgets were pushed down from the L0 dashboard to L1 landing pages.

Round 6 comp

Round 5 comp

Solutions

01 | Discovery — Exposing Structural Gaps

I conducted stakeholder interviews and workflow mapping to understand how reporting decisions were made across teams. Findings revealed:

Redundant metric definitions

Disconnected dashboard ownership

Charts selected based on familiarity, not suitability

No clear tie between reported metrics and operational decisions

High effort spent producing low-impact views

The core issue was not UI.

It was structural misalignment.

02 | Synthesis — Canonicalizing Pain Points

Rather than accumulating feedback, I consolidated findings into a canonical pain-point framework.

This reduced duplicated narratives and allowed us to isolate systemic patterns instead of isolated complaints. Key themes:

Effort vs. impact imbalance

Visualization misuse

Lack of governance standards

Reporting without accountability loops

This created alignment before design began.

03 | Framework Definition — Designing the Modular System

Instead of redesigning screens, I designed:

A modular dashboard architecture

Standardized visualization patterns

Reusable card structures

Clear metric grouping logic

Evaluation criteria for chart effectiveness

This shifted discussion from:

“What looks right?” to “What drives decisions?”
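To make the framework more concrete, here is a minimal sketch of how canonical metric definitions, reusable cards, and a fixed set of approved chart patterns could fit together. It is an illustration only, not the project's actual implementation: the class names, the `APPROVED_CHARTS` set, and the example metric (`avg_handle_time`) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Metric:
    """One canonical definition per metric, shared by every team."""
    key: str
    label: str
    decision: str  # the operational decision this metric informs


@dataclass(frozen=True)
class Card:
    """Reusable card: one metric shown with one approved chart pattern."""
    metric_key: str
    chart: str
    group: str


# Hypothetical set of standardized visualization patterns.
APPROVED_CHARTS = {"line", "bar", "single_value"}


@dataclass
class Dashboard:
    metrics: dict[str, Metric] = field(default_factory=dict)
    cards: list[Card] = field(default_factory=list)

    def register(self, metric: Metric) -> None:
        # Reject a second definition of the same metric key.
        if metric.key in self.metrics:
            raise ValueError(f"duplicate metric definition: {metric.key}")
        self.metrics[metric.key] = metric

    def add_card(self, card: Card) -> None:
        # A card must point at a registered metric and an approved chart.
        if card.metric_key not in self.metrics:
            raise KeyError(f"unknown metric: {card.metric_key}")
        if card.chart not in APPROVED_CHARTS:
            raise ValueError(f"chart not in approved patterns: {card.chart}")
        self.cards.append(card)

    def grouped(self) -> dict[str, list[str]]:
        """Return metric labels by group, in the order cards were added."""
        out: dict[str, list[str]] = {}
        for card in self.cards:
            label = self.metrics[card.metric_key].label
            out.setdefault(card.group, []).append(label)
        return out


# Hypothetical example metric, used only to exercise the sketch.
dashboard = Dashboard()
dashboard.register(
    Metric("avg_handle_time", "Average handle time", "Staffing levels")
)
dashboard.add_card(Card("avg_handle_time", "line", "Performance"))
print(dashboard.grouped())
```

The point of the sketch is that governance lives in the structure itself: a duplicate metric definition or an unapproved chart type is rejected when it is added, rather than debated in review.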

04 | Validation — Prototype & Iteration

An internal prototype was developed to:

Test modular consistency

Validate metric grouping logic

Reduce chart variation

Demonstrate scalable governance

Stakeholder feedback showed increased clarity in:

Performance visibility

Effort allocation

Data interpretation consistency

Although the full implementation was not completed, the framework established a scalable model for analytics governance.